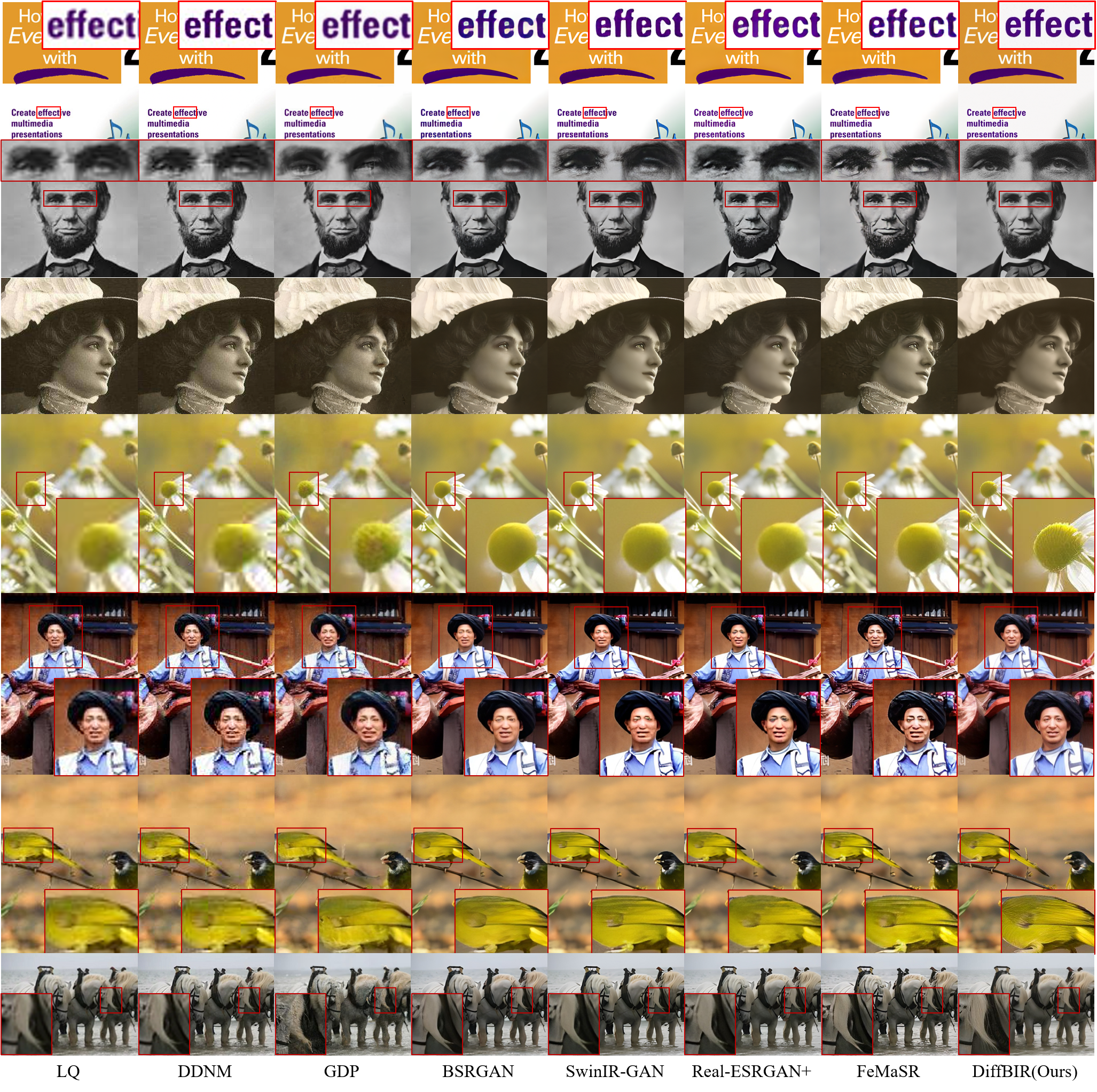

Comparisons of DiffBIR and state-of-the-art BSR/BFR methods on real-world images. Compared to BSR methods, DiffBIR is more effective to (1) generate natural textures; (2) reconstruct semantic regions; (3) not erase small details; (4) overcome severe cases. Compared to BFR methods, DiffBIR can (1) handle occlusion cases; (2) obtain satisfactory restoration beyond facial areas (e.g., headwear, earrings).